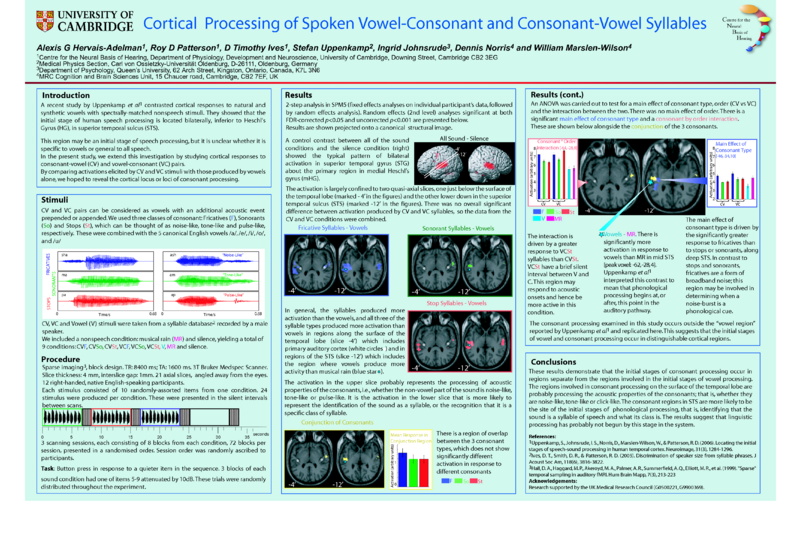

Cortical Processing of Spoken Vowel-Consonant and Consonant-Vowel Syllables

From CNBH Acoustic Scale Wiki

Uppenkamp et al. (2006) used fMRI to localize the initial stages of speech-specific processing in the auditory system. They contrasted synthetic vowel sounds with acoustically matched nonspeech sounds and showed that regions of the superior temporal gyrus (STG) and the superior temporal sulcus (STS) were more active in response to the vowels.

The present study uses fMRI to investigate the processing of consonant-vowel (CV) and vowel-consonant (VC) syllables in order to locate consonant processing in the human brain. We combined three classes of consonant (fricatives, sonorants and stops) with five canonical English vowels (/a/, /e/, /i/, /o/, /u/) to create CVs and VCs. Vowels, a spectrally matched nonspeech condition, and silent intervals were included as controls. Each stimulus was a 6.8 s string of 10 pseudo-randomly assorted tokens from a single condition. We used a sparse imaging design in which each stimulus was presented during the 6.9 s silent interval between scans (acquisition time TA = 2.1 s; repetition time TR = 9 s). Fourteen normal-hearing, right-handed, native English speakers heard 8 stimuli from each condition, in randomised order, in each of 3 blocks of 72 scans.
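As a rough illustration of the sparse-imaging timing and condition structure described above, the Python sketch below checks that each 6.8 s stimulus fits inside the silent gap (TR minus TA) and builds a shuffled scan order for each block. It assumes nine conditions (the six consonant-class by syllable-type cells plus vowels, nonspeech and silence) and reads "8 stimuli from each condition" as applying per block, which is what makes 9 × 8 = 72 scans per block. The condition labels, function names and the plain shuffle are hypothetical; they do not reproduce the study's actual pseudo-randomisation constraints.

```python
import random

# Timing parameters reported in the abstract (seconds).
TR = 9.0          # repetition time
TA = 2.1          # acquisition time per volume
STIM_DUR = 6.8    # duration of each 10-token stimulus string

# Silent gap between acquisitions, available for stimulus presentation.
silent_gap = round(TR - TA, 2)  # 6.9 s
assert STIM_DUR <= silent_gap, "stimulus must fit inside the silent gap"

# Assumed condition list: 3 consonant classes x 2 syllable types, plus 3 controls.
CONSONANT_CLASSES = ["fricative", "sonorant", "stop"]
SYLLABLE_TYPES = ["CV", "VC"]
CONTROLS = ["vowel", "nonspeech", "silence"]
CONDITIONS = [f"{c}_{s}" for c in CONSONANT_CLASSES for s in SYLLABLE_TYPES] + CONTROLS

STIMULI_PER_CONDITION = 8  # assumption: counted per block
N_BLOCKS = 3


def make_block(rng: random.Random) -> list[str]:
    """Return one block's scan order: each condition repeated, then shuffled."""
    order = [c for c in CONDITIONS for _ in range(STIMULI_PER_CONDITION)]
    rng.shuffle(order)  # plain shuffle; the study's own constraints are unknown
    return order


def make_session(seed: int = 0) -> list[list[str]]:
    """Return N_BLOCKS independently shuffled blocks from a seeded generator."""
    rng = random.Random(seed)
    return [make_block(rng) for _ in range(N_BLOCKS)]


if __name__ == "__main__":
    session = make_session(seed=1)
    print(len(CONDITIONS), "conditions;", [len(b) for b in session], "scans per block")
    total_s = sum(len(b) for b in session) * TR
    print("silent gap:", silent_gap, "s; scanning time:", total_s / 60, "min")
```

Under these assumptions the three blocks give 216 scans, or about 32 minutes of scanning at one volume every 9 s.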

Consistent with Uppenkamp et al. (2006), we found significantly greater activity in the left STS for vowels than for nonspeech. We observed a significant main effect of consonant class along the posterior to middle portion of the left STG, and a significant interaction between consonant class and syllable type (CV vs VC) in the left posterior STS. These results suggest that initial speech-specific processing of auditory signals occurs beyond primary auditory areas, in the left STG and left STS.
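The main effect and interaction reported here refer to the 3 × 2 factorial part of the design (consonant class by syllable type). As a generic illustration, and not the authors' analysis pipeline, the sketch below builds F-contrast weight matrices over the six syllable cells using Kronecker products. The cell ordering and all names are assumptions; in a real general linear model these weights would have to be embedded in the full design matrix alongside the control conditions and any other regressors.

```python
# Assumed cell order for the 3 x 2 design: class-major, syllable type minor.
CELLS = [(c, s) for c in ("fricative", "sonorant", "stop") for s in ("CV", "VC")]


def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]


D3 = [[1, -1, 0], [0, 1, -1]]  # successive differences between the 3 consonant classes
SUM3 = [[1, 1, 1]]             # sum over consonant classes
SUM2 = [[1, 1]]                # sum over CV and VC
DIFF2 = [[1, -1]]              # CV minus VC

main_effect_class = kron(D3, SUM2)      # does activity differ across consonant classes?
main_effect_syllable = kron(SUM3, DIFF2)  # does CV differ from VC overall?
interaction = kron(D3, DIFF2)           # does the CV-VC difference depend on class?

if __name__ == "__main__":
    print("cells:", CELLS)
    for name, con in [("class", main_effect_class),
                      ("syllable type", main_effect_syllable),
                      ("class x syllable type", interaction)]:
        print(name, con)
```

Each row sums to zero, and the rows of the three contrasts are mutually orthogonal, so they partition the variance among the six cells into the two main effects and their interaction.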