Category:Perception of Communication Sounds

From CNBH Acoustic Scale Wiki

Auditory perceptions are constructed in the brain from sounds entering the ear canal, in conjunction with current context and information from memory. Perceptions cannot be measured directly, so every description of a perception involves an explicit or implicit model of how perceptions are constructed. The category Auditory Processing of Communication Sounds focuses on how the auditory system might construct your initial experience of a sound, referred to as the 'auditory image'. It describes a computational model of how the construction might be accomplished: the Auditory Image Model (AIM). The category Perception of Communication Sounds focuses on the structures that appear in the auditory image and how we perceive them. The two categories are intended to work as a pair, with the reader moving back and forth as their interest shifts between the perceptions themselves and how the auditory system might construct them.

Roy Patterson

Introduction

This Perception category of the wiki focuses on our initial perception of a sound, that is, the auditory image that the sound produces (Patterson et al., 1992; Patterson, 1994). It is assumed that the sensory organs, and the neural mechanisms that process sensory data, together construct internal, mental models of objects in the world around us. The visual system constructs a visual object from the light the object reflects, and the auditory system constructs an auditory object from the sound the object emits. These objects are combined with any tactile and/or olfactory information (which might also be thought of as tactile and/or olfactory objects) to produce our experience of an external object. Our task as auditory neuroscientists is to characterize the auditory part of this object-modelling process.

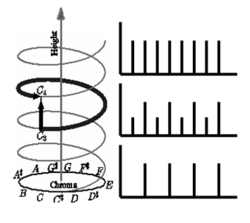

If the sound arriving at the ears is a noise, the auditory image is filled with activity, but it lacks organization and the details fluctuate continually. If the sound has a pulse-resonance form, an auditory figure appears in the auditory image with an elaborate structure that reflects the phase-locked neural firing pattern produced by the sound in the cochlea (Patterson et al., 1992). Extended segments of sound, like syllables or musical notes, cause auditory figures to emerge, evolve, and decay in what might be referred to as auditory events (Patterson et al., 1992), and these events characterize the acoustic gestures of the external source. All of the processing up to the level of auditory figures and events can proceed without the top-down processing associated with context or attention (Patterson et al., 1995). It is assumed, for example, that auditory figures and events are produced in response to sounds when we are asleep. Similarly, if we are presented with the call of an animal that we have never encountered before, the early stages of auditory processing will still produce an auditory event, even though we (the listeners) might be puzzled by the event.
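As a rough illustration of the 'pulse-resonance' form mentioned above, the following Python sketch synthesizes a sound in which each pulse of a regular train excites a single damped resonance. It is not part of AIM and is not taken from the cited papers; the function name `pulse_resonance`, its parameters, and the default values are assumptions chosen only for illustration.

```python
import math


def pulse_resonance(pulse_rate_hz=100.0, resonance_hz=1000.0,
                    decay_s=0.004, dur_s=0.05, fs=16000):
    """Return (wave, period): a pulse train in which every pulse
    excites one exponentially damped sinusoid (hypothetical example)."""
    n = int(round(dur_s * fs))               # total number of samples
    period = int(round(fs / pulse_rate_hz))  # samples between pulses
    wave = [0.0] * n
    for start in range(0, n, period):        # one resonance per pulse
        for i in range(start, n):
            t = (i - start) / fs             # time since this pulse
            envelope = math.exp(-t / decay_s)
            wave[i] += envelope * math.sin(2 * math.pi * resonance_hz * t)
    return wave, period


wave, period = pulse_resonance()
```

With these assumed defaults the resonance tails decay quickly relative to the pulse period, so after the first few pulses the waveform settles into a pattern that repeats every `period` samples, whereas a noise has no such repeating structure.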

Subsequently, when we are alert, the brain may interpret the auditory event in conjunction with events in other sensory systems and with contextual information that gives the event meaning. At this point, the event with its meaning becomes an auditory object, that is, the auditory part of the perceptual model of the external object that was the source of the sound. An introduction to auditory {objects, events, figures, images and scenes} is given in the paper entitled Homage à Magritte. It is a revised transcription of a talk presented at the Auditory Objects Meeting at the Novartis Foundation in London, 1-2 October 2007, and it is intended to stimulate discussion of how we use, and should use, terms like auditory {images, figures, events, objects and scenes}.

An introduction to auditory objects, events, figures, images and scenes

The perception of acoustic scale in speech sounds

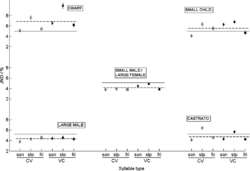

Discrimination of speaker size from syllable phrases (Ives et al., 2005)

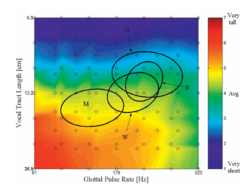

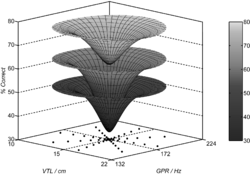

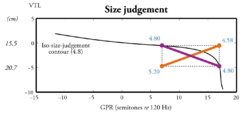

The interaction of glottal-pulse rate and vocal-tract length in judgements of speaker size, sex and age (Smith and Patterson, 2005)

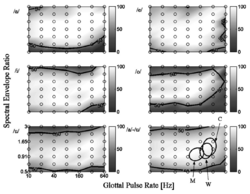

The processing and perception of size information in speech sounds (Smith et al., 2005)

The robustness of speech communication to changes in acoustic scale

The robustness of bio-acoustic communication and the role of normalization (Patterson et al., 2007)

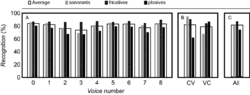

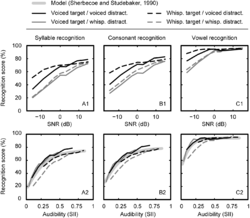

The robustness of human speech recognition to variation in vocal characteristics (Vestergaard et al., in preparation)

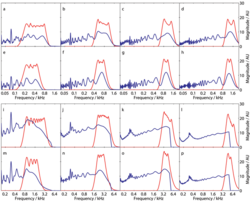

Effects of voicing in the recognition of concurrent syllables (Vestergaard and Patterson, 2009)

The interaction of the acoustic scale variables in speech perception

The interaction of vocal tract length and glottal pulse rate in the recognition of concurrent syllables (Vestergaard et al., 2009)

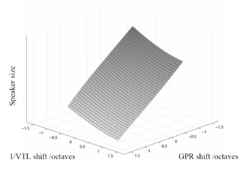

Comparison of relative and absolute judgements of speaker size (Walters et al., 2008)

The perception of acoustic scale in musical tones

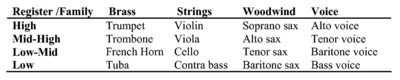

The perception of family and register in musical tones (Patterson et al., 2010)

Reviewing the definition of timbre as it pertains to the perception of speech and musical sound - ISH 2009 (Patterson et al., 2010)

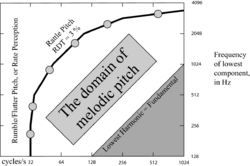

The Domain of Tonal Melodies: Physiological limits and some new possibilities (van Dinther and Patterson, 2005)

Perception of acoustic scale and size in musical instrument sounds (van Dinther and Patterson, 2006)

Pitch strength decreases as F0 and harmonic resolution increase in complex tones ... (Ives and Patterson, 2008)

Research projects

The effect of phase in the perception of octave height (van Dinther and Patterson, in preparation)

Revising the definition of timbre to make it useful for speech and musical sounds (BSA2008)

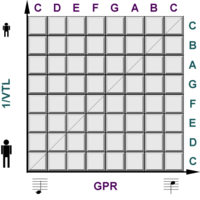

The role of GPR and VTL in the definition of speaker identity (Gaudrain, Li, Ban and Patterson, Interspeech 2009)

Estimating the size and sex of a speaker from their speech sounds

Obligatory streaming based on acoustic scale difference

Published papers for the Category:Perception of Communication Sounds

Discrimination of Source Size

Discrimination of speaker size: Smith et al. (2005), Smith and Patterson (2005), Ives et al. (2005), Smith et al. (2007)

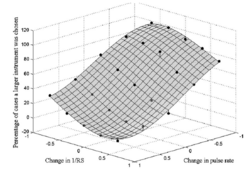

Discrimination of musical instrument size: van Dinther and Patterson (2006)

Robustness of Auditory Perception to Changes in Source Size

Robustness of speech recognition: Smith et al. (2005), Smith and Patterson (2005), Ives et al. (2005), Smith et al. (2007), Walters et al. (2008)

Robustness of music perception: van Dinther and Patterson (2006)

References

- Ives, D.T., Smith, D.R.R. and Patterson, R.D. (2005). “Discrimination of speaker size from syllable phrases.” J. Acoust. Soc. Am., 118, p.3816-3822.

- Ives, D.T. and Patterson, R.D. (2008). “Pitch strength decreases as F0 and harmonic resolution increase in complex tones composed exclusively of high harmonics.” J. Acoust. Soc. Am., 123, p.2670-2679.

- Patterson, R.D. (1994). “The sound of a sinusoid: Time-interval models.” J. Acoust. Soc. Am., 96, p.1419-1428.

- Patterson, R.D., Allerhand, M.H. and Giguère, C. (1995). “Time-domain modeling of peripheral auditory processing: A modular architecture and a software platform.” J. Acoust. Soc. Am., 98, p.1890-1894.

- Patterson, R.D., Gaudrain, E. and Walters, T.C. (2010). “The Perception of Family and Register in Musical Tones”, in Music Perception, Jones, M.R., Fay, R.R. and Popper, A.N. editors, p.13-50 (Springer-Verlag, New York).

- Patterson, R.D., Robinson, K., Holdsworth, J., McKeown, D., Zhang, C. and Allerhand, M. (1992). “Complex Sounds and Auditory Images”, in Auditory Physiology and Perception, Cazals, Y., Demany, L. and Horner, K. editors (Pergamon Press, Oxford).

- Patterson, R.D., van Dinther, R. and Irino, T. (2007). “The robustness of bio-acoustic communication and the role of normalization”, in Proceedings of the 19th International Congress on Acoustics, p.07-011.

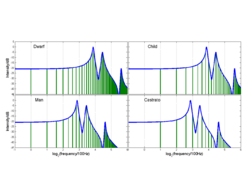

- Smith, D.R.R., Patterson, R.D., Turner, R.E., Kawahara, H. and Irino, T. (2005). “The processing and perception of size information in speech sounds.” J. Acoust. Soc. Am., 117, p.305-318.

- Smith, D.R.R., Walters, T.C. and Patterson, R.D. (2007). “Discrimination of speaker sex and size when glottal-pulse rate and vocal-tract length are controlled.” J. Acoust. Soc. Am., 122, p.3628-3639.

- Smith, D.R.R. and Patterson, R.D. (2005). “The interaction of glottal-pulse rate and vocal-tract length in judgements of speaker size, sex, and age.” J. Acoust. Soc. Am., 118, p.3177-3186.

- van Dinther, R. and Patterson, R.D. (2006). “Perception of acoustic scale and size in musical instrument sounds.” J. Acoust. Soc. Am., 120, p.2158-2176.

- Vestergaard, M.D., Fyson, N.R.C. and Patterson, R.D. (2009). “The interaction of vocal tract length and glottal pulse rate in the recognition of concurrent syllables.” J. Acoust. Soc. Am., 125, p.1114-1124.

- Vestergaard, M.D. and Patterson, R.D. (2009). “Effects of voicing in the recognition of concurrent syllables.” J. Acoust. Soc. Am., 126, p.2860-2863.

- Walters, T.C., Gomersall, P.A., Turner, R.E. and Patterson, R.D. (2008). “Comparison of relative and absolute judgments of speaker size based on vowel sounds.” Proceedings of Meetings on Acoustics, 1, p.1-9.
